Statistical distributions exhibit a vast array of forms and characteristics, presenting a significant challenge in the field of inferential statistics. This diversity complicates the process of making accurate predictions and drawing generalizations from data. The complexity arises from the fact that each distribution type has its unique properties, making it difficult to apply a one-size-fits-all approach to statistical analysis.

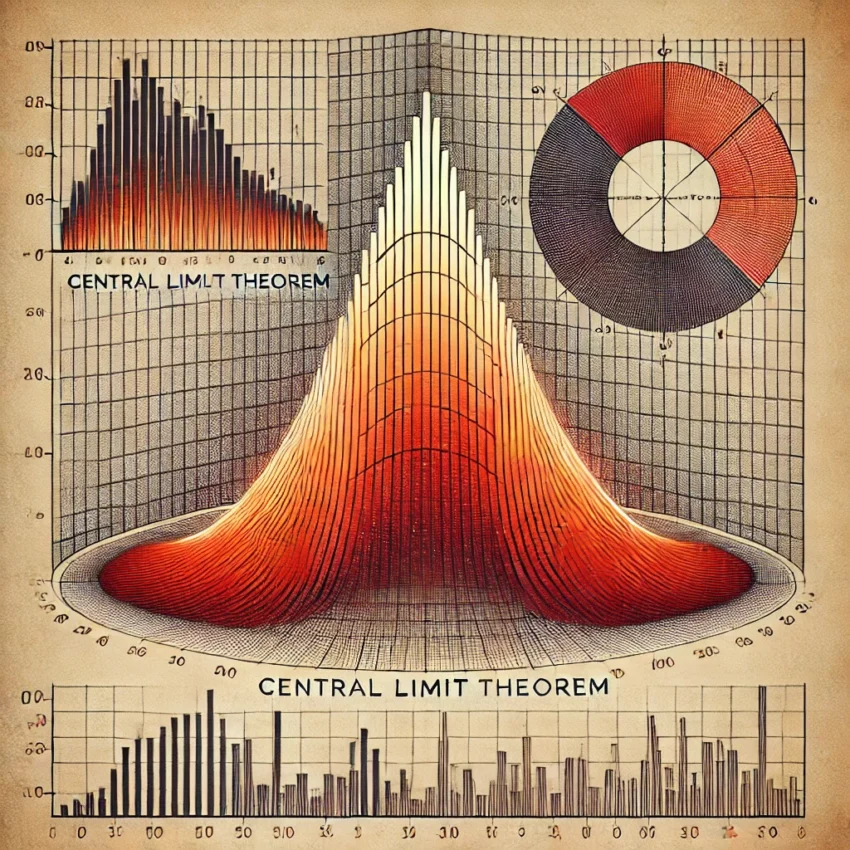

However, amidst this complexity, the central limit theorem (CLT) emerges as a powerful and indispensable tool. The CLT provides a robust framework that simplifies many statistical procedures and enables researchers to make reliable inferences across a wide range of scenarios, regardless of the underlying distribution of the population.

The CLT is a cornerstone of statistics and probability. Its influence extends beyond theoretical statistics to practical applications in sectors such as medicine, economics, psychology, and engineering.

The Central Limit Theorem states that the distribution of the sample mean approaches a normal (Gaussian) distribution as the sample size grows, regardless of the shape of the population’s original distribution, provided the population has a finite mean and variance. This holds even when the original distribution is far from normal.

The conditions are:

- Independence: The elements of the sample must be selected independently.

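- Sample size: The normal approximation improves as the sample size n increases. A common rule of thumb is n ≥ 30, although strongly skewed or heavy-tailed populations may need larger samples.

- Population: The population does not need to be normally distributed, but it must have a finite mean and variance. Under that condition, the CLT can be applied to samples of sufficient size.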
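The sample-size condition can be checked numerically. The sketch below is an illustration only: it assumes an exponential population with scale 2, and the helpers `skewness` and `sample_mean_skewness` are hypothetical names introduced here. It measures how asymmetric the distribution of sample means remains for several values of n; a skewness near zero indicates a roughly symmetric, bell-like shape.

```python
import numpy as np

def skewness(values):
    """Third standardized moment; 0 for a perfectly symmetric distribution."""
    values = np.asarray(values, dtype=float)
    centered = values - values.mean()
    return float(np.mean(centered ** 3) / np.std(values) ** 3)

def sample_mean_skewness(sample_size, num_samples=20000, seed=0):
    """Skewness of the means of `num_samples` exponential samples of a given size."""
    rng = np.random.default_rng(seed)
    # Assumed population: exponential with scale 2 (illustrative choice)
    draws = rng.exponential(scale=2.0, size=(num_samples, sample_size))
    return skewness(draws.mean(axis=1))

skew_by_size = {n: sample_mean_skewness(n) for n in (2, 30, 200)}
for n, s in skew_by_size.items():
    print(f"n = {n:>3}: skewness of sample means = {s:.2f}")
```

For an exponential population, the theoretical skewness of the sample mean is 2/√n, so the printed values should fall from roughly 1.4 at n = 2 toward zero as n grows.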

The distribution of the sample mean follows:

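$$\bar{X} \sim N\left(\mu, \frac{\sigma^2}{n}\right) \quad \text{(approximately, for large } n\text{)}$$

where μ is the population mean, σ² is the population variance, and n is the sample size.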

The variance of the sample mean is:

$$\operatorname{Var}(\bar{X}) = \frac{\sigma^2}{n}$$

SE is the standard error of the sample mean:

$$SE = \frac{\sigma}{\sqrt{n}}$$

Variance and standard error are closely related but distinct concepts. The standard error, defined as σ/√n (where σ is the population standard deviation and n is the sample size), measures how precisely the sample mean estimates the population mean.

The variance of the sample mean quantifies the spread of sample means around the population mean, while the standard error indicates how precisely the sample mean estimates the population mean. The standard error is the square root of the sample mean’s variance, so it is expressed in the same units as the sample mean, whereas the variance is expressed in squared units.
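The link between the two quantities follows from the independence condition. As a short derivation, assuming independent observations $X_1, \dots, X_n$ that share the population variance $\sigma^2$:

$$
\operatorname{Var}(\bar{X})
= \operatorname{Var}\!\left(\frac{1}{n}\sum_{i=1}^{n} X_i\right)
= \frac{1}{n^2}\sum_{i=1}^{n}\operatorname{Var}(X_i)
= \frac{n\sigma^2}{n^2}
= \frac{\sigma^2}{n},
\qquad
SE = \sqrt{\operatorname{Var}(\bar{X})} = \frac{\sigma}{\sqrt{n}}.
$$

The second equality is where independence is used: without it, covariance terms between observations would appear in the sum.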

Because √n appears in the denominator, the standard error shrinks as the sample size grows, but only in proportion to the square root of n: quadrupling the sample size halves the standard error. Standard error is crucial in inferential statistics, where researchers aim to estimate the population mean from a sample.
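A few lines of arithmetic make the effect of n concrete. The sketch below assumes a population standard deviation of σ = 2 (the value for an exponential distribution with scale 2); `standard_error` is a hypothetical helper name. It first evaluates σ/√n for three sample sizes and then compares the formula with the spread of simulated sample means.

```python
import numpy as np

def standard_error(sigma, n):
    """Standard error of the sample mean: sigma / sqrt(n)."""
    return sigma / np.sqrt(n)

sigma = 2.0  # assumed population standard deviation (exponential, scale=2)

# Each step multiplies n by 4, so each step halves the standard error.
for n in (25, 100, 400):
    print(f"n = {n:>3}: SE = {standard_error(sigma, n):.2f}")

# Empirical check: the standard deviation of many simulated sample means
# should be close to sigma / sqrt(n).
rng = np.random.default_rng(0)
n = 100
simulated_means = rng.exponential(scale=sigma, size=(20000, n)).mean(axis=1)
empirical_se = simulated_means.std()
print(f"theoretical SE = {standard_error(sigma, n):.3f}, empirical SE = {empirical_se:.3f}")
```

The loop prints standard errors of 0.40, 0.20, and 0.10; the empirical value for n = 100 should land close to 0.20, with small differences due to simulation noise.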

The Central Limit Theorem has several important implications:

- The CLT provides the foundation for inferential statistics—the branch of statistics concerned with drawing conclusions about a population from sample data. It enables us to make inferences about population parameters such as the mean, proportion of a specific characteristic, or other statistics using only samples rather than the entire population.

- A key strength of the CLT is its applicability to populations with a wide range of distributions, whether exponential, binomial, or otherwise, as long as the variance is finite. This versatility is crucial in real-world scenarios where data distributions are often unknown, skewed, or non-normal.

- The CLT simplifies estimates and inferences by eliminating the need to know the population’s exact distribution, thus reducing computational complexity.

- In many practical applications, such as medicine, understanding the uncertainty of measurements and estimates is crucial. The CLT provides a mathematical framework to quantify this uncertainty. It demonstrates that estimate precision improves with larger sample sizes, as the variance of the sample mean distribution is inversely proportional to the sample size.

- The CLT enables researchers to simplify statistical models by assuming a normal distribution of sample means, even when the raw data aren’t normal. This simplification makes models more manageable, particularly for large datasets or complex systems.
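As one illustration of how these implications are used in practice, the sketch below builds an approximate 95% confidence interval for a population mean from a single sample, relying on the normal approximation for the sample mean. It is a minimal example under stated assumptions: the population is exponential with mean 2, the sample size is 50, the population standard deviation is replaced by the sample standard deviation, and `normal_confidence_interval` is a hypothetical helper name.

```python
import numpy as np

def normal_confidence_interval(sample, z=1.96):
    """Approximate 95% interval for the mean: mean +/- z * s / sqrt(n)."""
    sample = np.asarray(sample, dtype=float)
    mean = sample.mean()
    # ddof=1 gives the sample standard deviation, standing in for the unknown sigma
    se = sample.std(ddof=1) / np.sqrt(sample.size)
    return mean - z * se, mean + z * se

rng = np.random.default_rng(1)
true_mean = 2.0  # assumed population mean (exponential with scale=2)

# One interval from one sample of size 50
low, high = normal_confidence_interval(rng.exponential(scale=true_mean, size=50))
print(f"95% CI from one sample: ({low:.2f}, {high:.2f})")

# Repeat many times to see how often the interval contains the true mean
num_repeats = 5000
covered = 0
for _ in range(num_repeats):
    lower, upper = normal_confidence_interval(rng.exponential(scale=true_mean, size=50))
    covered += int(lower <= true_mean <= upper)
coverage = covered / num_repeats
print(f"Fraction of intervals containing the true mean: {coverage:.3f}")
```

For a skewed population and a moderate sample size, the observed coverage is typically somewhat below the nominal 95%, which is a reminder that the normal approximation is only approximate at finite n.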

The Central Limit Theorem is crucial for inferential statistics. It provides a theoretical framework that justifies the use of the normal distribution in many practical applications, even when the underlying data distribution is unknown or non-normal. The theorem simplifies the work of statisticians and researchers while enabling accurate estimates and predictions under uncertain conditions. Its generality and robustness make it an indispensable tool for science, engineering, economics, and any discipline requiring data analysis.

The following Python program illustrates the Central Limit Theorem (CLT) using a non-normal population (in this case, exponential). The population itself stays skewed, but the means of repeated samples drawn from it are approximately normally distributed.

import numpy as np
import matplotlib.pyplot as plt
import seaborn as sns

# Step 1: Generate a non-normal distribution
# We will use the exponential distribution, which is commonly used to model waiting times.
# This distribution is highly skewed, making it a good candidate for demonstrating the CLT.
np.random.seed(42)  # For reproducibility
population_size = 100000  # Size of the population
exponential_distribution = np.random.exponential(scale=2, size=population_size)

# Step 2: Visualize the original non-normal distribution
plt.figure(figsize=(10, 6))
sns.histplot(exponential_distribution, kde=True, bins=50, color='blue')
plt.title('Original Non-Normal Distribution (Exponential)')
plt.xlabel('Value')
plt.ylabel('Frequency')
plt.show()

# Step 3: Apply the Central Limit Theorem
# We will take multiple samples from the non-normal distribution, compute the sample means, and observe their distribution.
sample_size = 50  # Size of each sample
num_samples = 1000  # Number of samples
sample_means = []  # To store the means of each sample

# Generate samples and compute their means
for _ in range(num_samples):
    sample = np.random.choice(exponential_distribution, size=sample_size, replace=True)
    sample_means.append(np.mean(sample))

# Step 4: Visualize the distribution of sample means
plt.figure(figsize=(10, 6))
sns.histplot(sample_means, kde=True, bins=50, color='orange')
plt.title('Distribution of Sample Means (CLT in Action)')
plt.xlabel('Sample Mean')
plt.ylabel('Frequency')
plt.show()

# Step 5: Compare the distribution of sample means with a normal distribution
# We will overlay a normal distribution with the same mean and standard deviation
mean_of_means = np.mean(sample_means)
std_of_means = np.std(sample_means)

# Plotting the sample means on a density scale (stat='density') so the
# histogram and the normal curve share the same y-axis
plt.figure(figsize=(10, 6))
sns.histplot(sample_means, kde=True, bins=50, color='orange', stat='density', label='Sample Means Distribution')

# Plotting the normal distribution (probability density function)
x = np.linspace(min(sample_means), max(sample_means), 100)
normal_pdf = (1 / (std_of_means * np.sqrt(2 * np.pi))) * np.exp(-0.5 * ((x - mean_of_means) / std_of_means) ** 2)
plt.plot(x, normal_pdf, color='red', label='Normal Distribution')
plt.title('Comparison of Sample Means with Normal Distribution')
plt.xlabel('Sample Mean')
plt.ylabel('Density')
plt.legend()
plt.show()

# Step 6: Conclusion
# The distribution of sample means is approximately normal, demonstrating the Central Limit Theorem.

Program Description:

First, we generate a non-normal distribution, specifically an exponential distribution, using NumPy’s np.random.exponential() function. We then create an initial graph to illustrate that this distribution is indeed not normal. Next, we randomly draw 1,000 samples, each containing 50 numbers, from the exponential distribution. We calculate the mean of each sample, store these means in the variable sample_means, and visualize the results in a second graph. Finally, in the third graph, we overlay a normal curve with the same mean and standard deviation on the distribution of sample means to compare the two.
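The visual comparison can be complemented with a quick numeric check. The sketch below is not part of the original program: it repeats the sampling step with NumPy’s `default_rng` generator in vectorized form (so the random draws differ from those above), standardizes the sample means, and reports the fraction falling within 1 and 1.96 standard deviations of their mean. For a normal distribution these fractions are about 68% and 95%.

```python
import numpy as np

rng = np.random.default_rng(42)

# Same setup as the program above: exponential population, 1,000 samples of size 50
population = rng.exponential(scale=2, size=100_000)
samples = rng.choice(population, size=(1000, 50), replace=True)
sample_means = samples.mean(axis=1)

# Standardize the sample means, then count how many fall within the usual normal bands
z_scores = (sample_means - sample_means.mean()) / sample_means.std()
within_1_sd = float(np.mean(np.abs(z_scores) <= 1.0))
within_196_sd = float(np.mean(np.abs(z_scores) <= 1.96))

print(f"Within 1 SD:    {within_1_sd:.3f} (normal: about 0.683)")
print(f"Within 1.96 SD: {within_196_sd:.3f} (normal: about 0.950)")
```

Values close to the normal benchmarks support the conclusion drawn from the third graph, although a check like this is a rough diagnostic rather than a formal normality test.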